Featured Projects

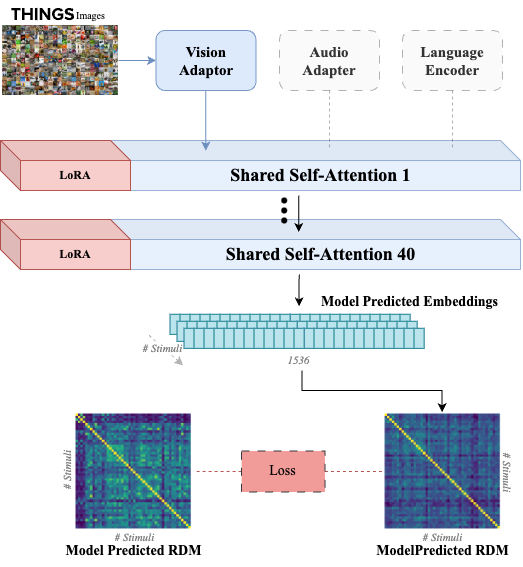

Crossmodal Human Enhancement of Multimodal AI

Human perception is inherently multisensory, with sensory modalities influencing one another. To develop more human-like multimodal AI models, it is essential to design systems that not only process multiple sensory inputs but also reflect their interconnections. In this study, we investigate the cross-modal interactions between vision and audition in large multimodal transformer models. Additionally, we fine-tune the visual processing of a state-of-the-art multimodal model using human visual behavioral embeddings.

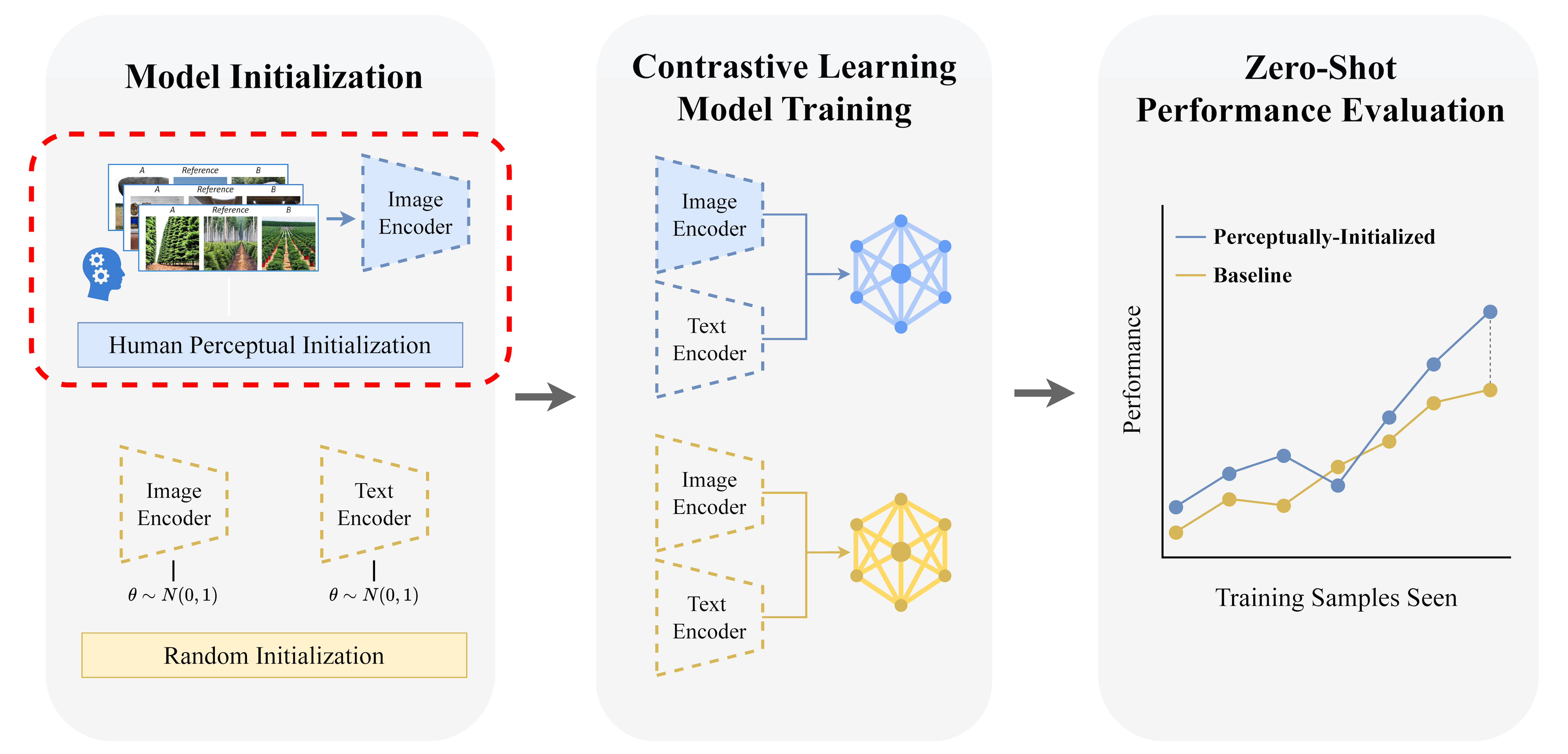

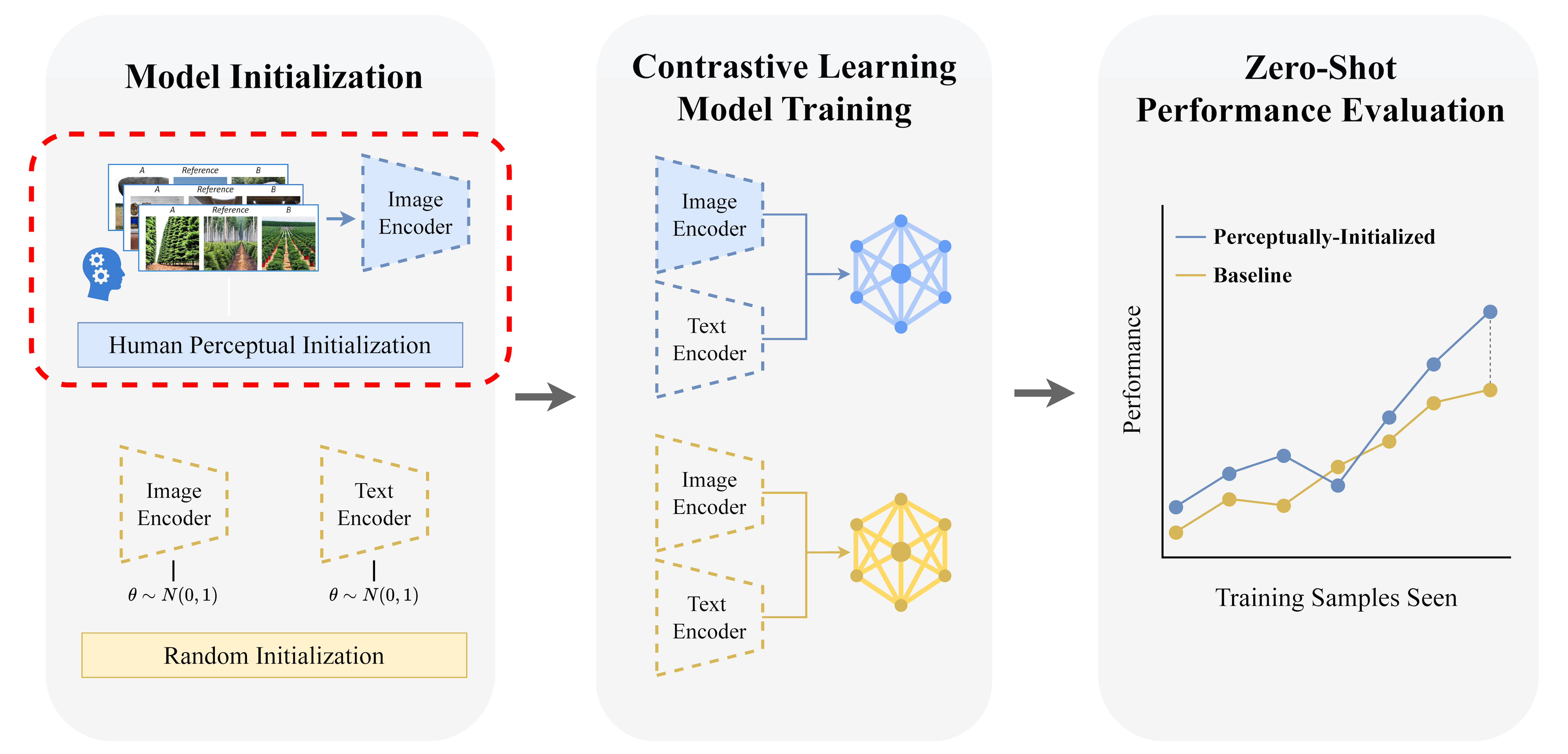

Perceptual-Initialization for Vision-Language Models

This project introduces Perceptual-Initialization (PI), a paradigm shift in visual representation learning that incorporates human perceptual structure during the initialization phase rather than as a downstream fine-tuning step. By integrating human-derived triplet embeddings from the NIGHTS dataset to initialize a CLIP vision encoder, followed by self-supervised learning, our approach demonstrates significant zero-shot performance improvements across multiple classification and retrieval benchmarks without any task-specific fine-tuning. Our findings challenge conventional wisdom by showing that embedding human perceptual structure during early representation learning yields more capable vision-language aligned systems that generalize immediately to unseen tasks.
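As a rough illustration of how human similarity judgments can supervise an embedding, the sketch below scores a batch of two-alternative forced-choice triplets (a reference image and two candidates, with a human vote for the closer candidate) using a hinge loss on cosine distances. This is an assumption-laden example, not the project's actual training code: the function names, the `margin` value, and the choice of hinge loss are all hypothetical, and the embeddings are plain NumPy arrays rather than CLIP outputs.

```python
import numpy as np

def cosine_distance(a, b):
    """Row-wise cosine distance between two (n, d) arrays."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return 1.0 - np.sum(a * b, axis=-1)

def two_afc_hinge_loss(ref, img0, img1, human_choice, margin=0.05):
    """Hinge loss on human two-alternative judgments (hypothetical sketch).

    human_choice[i] is 0 if people judged img0[i] closer to ref[i], else 1.
    The loss is zero once the chosen image is closer than the other by
    at least `margin` in cosine distance.
    """
    d0 = cosine_distance(ref, img0)
    d1 = cosine_distance(ref, img1)
    # Map choice {0, 1} to sign {-1, +1}: choice 1 means we want d0 - d1 > 0.
    sign = 2.0 * np.asarray(human_choice, dtype=float) - 1.0
    return np.maximum(0.0, margin - sign * (d0 - d1)).mean()

# Toy example: img0 matches the reference exactly, img1 is orthogonal to it.
ref = np.array([[1.0, 0.0]])
img0 = np.array([[1.0, 0.0]])
img1 = np.array([[0.0, 1.0]])
agree = two_afc_hinge_loss(ref, img0, img1, np.array([0]))
disagree = two_afc_hinge_loss(ref, img0, img1, np.array([1]))
```

In the toy example, the loss vanishes when the embedding agrees with the human vote and is large when it disagrees, which is the signal an optimizer would use to pull the embedding toward human perceptual structure.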

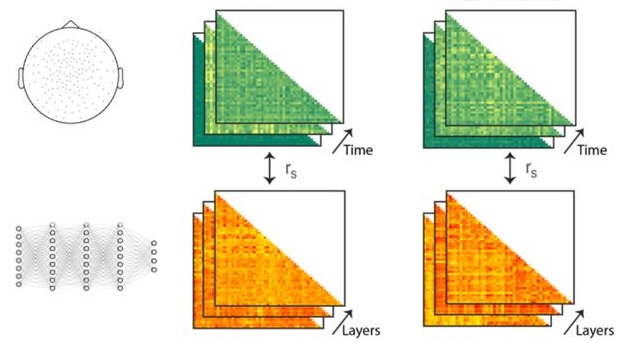

Personalized Brain-Inspired AI Models (CLIP-Human Based Analysis)

We introduce personalized brain-inspired AI models that integrate human behavioral embeddings and neural data to better align artificial intelligence with cognitive processes. The study fine-tunes a state-of-the-art multimodal AI model (CLIP) in a stepwise manner: first using large-scale behavioral decisions, then group-level neural data, and finally participant-specific neural dynamics. Each step enhances both behavioral and neural alignment. The results demonstrate the potential for individualized AI systems capable of capturing unique neural representations, with applications spanning medicine, cognitive research, and human-computer interfaces.

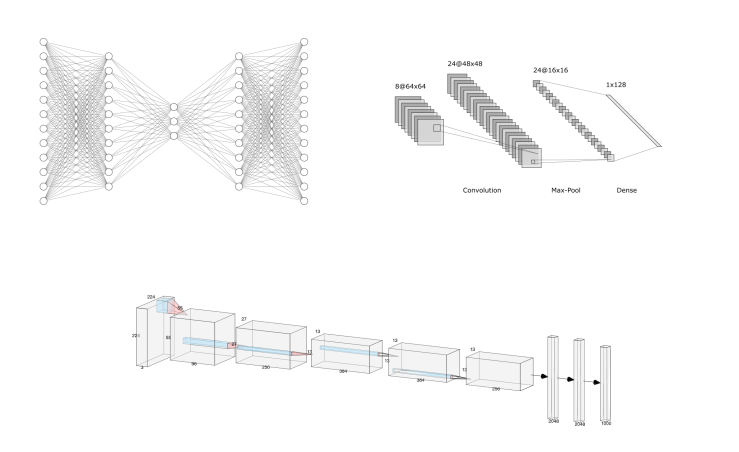

Brain-Inspired Hybrid Architectural Designs

We leverage activation spaces from diverse neural network architectures to identify optimal combinations of network processing that functionally emulate brain activity. By systematically exploring and aligning these internal representations with neural responses to sensory stimuli, this approach aims to discover novel, hybrid architectures capable of modeling the brain's complex computational principles.

Representational Similarity Analysis (RSA) GUI

This project develops an intuitive graphical user interface (GUI) for aligning and comparing representational structures across diverse AI models and biological systems. Using Representational Similarity Analysis (RSA), the tool constructs and analyzes Representational Dissimilarity Matrices (RDMs) to identify correspondences between different systems. It offers multiple alignment metrics, including Spearman correlation, Centered Kernel Alignment (CKA), and Procrustes analysis, as well as fitting techniques such as ridge regression and Bayesian methods. By letting researchers compare and visualize representations without writing analysis code, the GUI helps uncover shared and unique patterns of information encoding across models and biological data, bridging machine learning and cognitive neuroscience.
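To make the RSA workflow concrete, here is a minimal NumPy sketch of two of the comparisons named above: a Spearman correlation between RDMs, and linear CKA computed directly on activations. It is an illustration under stated assumptions, not the GUI's implementation. The function names are hypothetical, the RDM uses correlation distance (one common choice among several), and the simple ranking helper assumes continuous data without ties.

```python
import numpy as np

def rdm(activations):
    """Correlation-distance RDM: 1 - Pearson r between stimulus rows."""
    return 1.0 - np.corrcoef(activations)

def upper_triangle(matrix):
    """Vectorize the off-diagonal upper triangle of a square matrix."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

def rank(values):
    """Ordinal ranks; assumes no ties, which holds for continuous data."""
    ranks = np.empty(len(values))
    ranks[np.argsort(values)] = np.arange(len(values))
    return ranks

def rsa_spearman(acts_a, acts_b):
    """Spearman correlation between the RDMs of two systems.

    Both inputs are (n_stimuli, n_features) arrays over the same stimuli;
    the feature counts may differ.
    """
    va = upper_triangle(rdm(acts_a))
    vb = upper_triangle(rdm(acts_b))
    return np.corrcoef(rank(va), rank(vb))[0, 1]

def linear_cka(x, y):
    """Linear Centered Kernel Alignment between two activation matrices."""
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    cross = np.linalg.norm(y.T @ x, "fro") ** 2
    return cross / (np.linalg.norm(x.T @ x, "fro") * np.linalg.norm(y.T @ y, "fro"))

# Synthetic stand-ins: 20 stimuli, a 64-unit "model" layer, and a 30-channel
# "neural" recording that is a noisy linear readout of the model layer.
rng = np.random.default_rng(0)
model_acts = rng.normal(size=(20, 64))
neural_acts = model_acts @ rng.normal(size=(64, 30)) + 0.1 * rng.normal(size=(20, 30))

rsa_score = rsa_spearman(model_acts, neural_acts)
cka_score = linear_cka(model_acts, neural_acts)
```

Because RSA compares stimulus-by-stimulus dissimilarity structure rather than raw features, the two systems do not need the same number of units or channels, which is what makes it suitable for comparing models with brain recordings.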

Audiovisual Object Embeddings over Development

This project investigates how visual and auditory semantic embeddings evolve over the course of development, driven by sensory experiences and learning. The research focuses specifically on how distinct visual and auditory representations independently develop and mature into adult-like semantic spaces. Using behavioral experiments, computational modeling, and neural validation through fMRI and MEG, we aim to characterize developmental trajectories and pinpoint how sensory experience shapes these semantic embeddings. Experiments will use real-world stimuli to explore the plasticity of semantic representations. Insights from this project will deepen our understanding of cognitive development.

About The Tovar Brain-Inspired AI Lab

The focus of our work lies in understanding intelligence, both artificial and natural, through the development of AI models that capture cognitive processes. In building these models, our aim is to gain mechanistic insights into cognitive disorders that have historically been difficult to understand.

Given that human sensory processing spans multiple modalities, our work investigates the integration of multimodal signals across senses while observing neural, behavioral, and physiological outputs. In addition to processing these diverse signals, we are interested in how the senses come together, studying how multisensory integration in models parallels biological sensory integration.

This has led to work that focuses on refining models through cross-modal fine-tuning, where training on one type of data improves performance and human alignment in another modality, offering new perspectives on sensory integration and brain plasticity. Ultimately, our goal is to develop scalable, generalizable models that reflect human cognitive diversity, helping in the understanding and treatment of neurological conditions while bridging the gap between theoretical neuroscience and real-world clinical applications.

Latest News

Nick joins the Tovar lab

Nick is an undergraduate student in Biomedical Engineering and will be working on CLIP-based neural alignment with neural spikes and local field poten...

Clara joins the Tovar lab

Clara is a junior pursuing a double major in Computer Science and Mathematics and will be working on crossmodal AI enhancement, image quality analysis...

Perceptual-Initialization Research Published on arXiv

This work represents a shift in visual representation learning incorporating human perceptual structure during initialization rather than as a downstr...