About

My interests lie at the intersection of healthcare and technology, with deep experience in web development, software design, and data analysis. You can find more about me and what I'm doing here: https://justinkong.info/.

Research Interests

Current Research Projects

LLM: Story, Image, and Audio Generation for Developmental Cognition and Linguistics

This project investigates how developmental stage and immersion affect memory retention and cognitive processes. Participants of different ages will recall stories presented in various immersive environments, allowing us to assess the influence of these factors on learning. Additionally, we will prompt large language models (LLMs) with personas representing different age groups to generate retellings of the same stories. By comparing these AI-generated narratives to human retellings, we aim to evaluate how closely LLM outputs align with human cognitive patterns across developmental stages. This approach will provide insights into the capabilities of LLMs in modeling age-specific linguistic and cognitive behaviors.

Influence of Immersion, Congruence, and Modality on Sensory Encoding and Memory

This research investigates how immersive environments, the congruence between sensory modalities, and the mode of information presentation affect sensory encoding and memory retention. Participants across various age groups will engage in storytelling tasks within virtual reality settings that vary in environmental congruence and modality (visual, auditory, or multimodal). We will analyze the recorded retellings for speech dimensions such as intonation, tone, pitch, and semantic structure to quantitatively assess narrative patterns. We expect the findings to clarify how immersion, congruence, and modality influence sensory encoding and memory, and to inform the design of educational tools and therapeutic interventions.

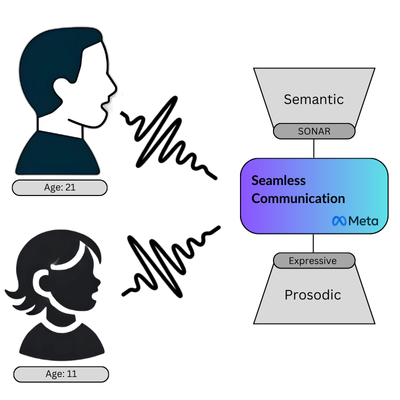

Speech-to-Sentiment Pipeline for Analyzing Semantic and Expressive Changes Across the Lifespan

This project aims to develop an accessible pipeline and user interface that converts spoken language into sentiment and semantic analyses. The initial application will investigate how semantics and expressivity in speech evolve with age. By processing speech inputs to extract prosodic features (such as intonation, tone, and pitch) and semantic content, we will generate representational embeddings that capture both the meaning and the emotional nuances of spoken language. Using multimodal models, the pipeline will support comparison of these embeddings across age groups, providing insights into how speech characteristics develop across the human lifespan.
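
As a rough illustration of the final comparison step only, the sketch below averages the embeddings within each age group and reports the cosine similarity between group centroids. It is not the project's implementation: the function names, the age-group labels, and the two-dimensional toy vectors are all hypothetical, and centroid cosine similarity is just one simple way such a comparison could be made. Real embeddings would come from the speech and multimodal models described above.

```python
import math
from statistics import fmean


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def group_centroid(embeddings):
    """Dimension-wise mean of a non-empty list of embedding vectors."""
    if not embeddings:
        raise ValueError("each group needs at least one embedding")
    return [fmean(dimension) for dimension in zip(*embeddings)]


def compare_age_groups(embeddings_by_group):
    """Return cosine similarity between centroids for every pair of groups."""
    centroids = {
        group: group_centroid(vectors)
        for group, vectors in embeddings_by_group.items()
    }
    groups = sorted(centroids)
    return {
        (first, second): cosine_similarity(centroids[first], centroids[second])
        for index, first in enumerate(groups)
        for second in groups[index + 1:]
    }


# Hypothetical toy data: one list of embedding vectors per age group.
toy_embeddings = {
    "children": [[1.0, 0.0], [1.0, 0.0]],
    "young_adults": [[1.0, 1.0]],
    "older_adults": [[0.0, 1.0]],
}

similarities = compare_age_groups(toy_embeddings)
for pair, score in similarities.items():
    print(pair, round(score, 3))
```

Comparing centroids keeps the example short, but it discards within-group variation; a fuller analysis would likely compare distributions of embeddings rather than their means.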