About

Aspiring Data Scientist, Artificial Intelligence Researcher, Computational Neuroscientist.

Research Interests

Current Research Projects

Personalized Brain-Inspired AI Models (CLIP-Human Based Analysis)

We introduced personalized brain-inspired AI models that integrate human behavioral embeddings and neural data to better align artificial intelligence with human cognitive processes. The study fine-tunes a state-of-the-art multimodal AI model (CLIP) in stepwise fashion: first with large-scale behavioral decisions, then with group-level neural data, and finally with participant-specific neural dynamics, improving both behavioral and neural alignment. The results demonstrate the potential for individualized AI systems that capture unique neural representations, with applications spanning medicine, cognitive research, and human-computer interfaces.

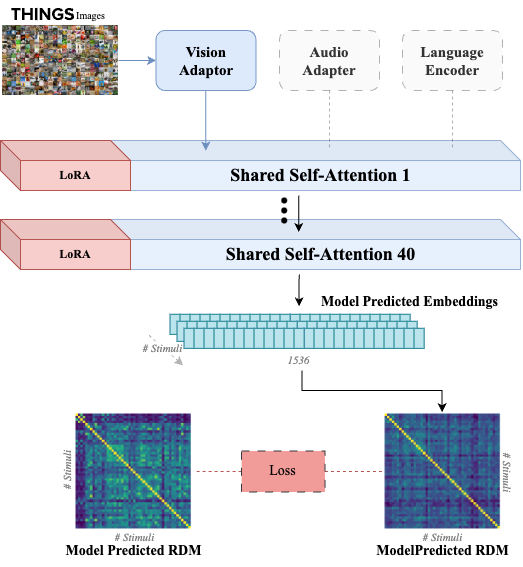
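The description above does not specify how behavioral and neural alignment are scored. A common choice in this field is representational similarity analysis (RSA), which compares the geometry of two representations rather than their raw features. The minimal sketch below assumes that model embeddings and neural response patterns are NumPy arrays with one row per stimulus, in the same stimulus order; the function names are illustrative and are not taken from the project's code.

```python
import numpy as np


def rdm(features: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix: 1 - Pearson r between every pair of rows (stimuli)."""
    return 1.0 - np.corrcoef(features)


def upper_triangle(matrix: np.ndarray) -> np.ndarray:
    """Entries above the diagonal, so each stimulus pair is counted once."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]


def rank(values: np.ndarray) -> np.ndarray:
    """Ranks 0..n-1; ties are broken arbitrarily, which is adequate for continuous data."""
    ranks = np.empty(len(values), dtype=float)
    ranks[np.argsort(values)] = np.arange(len(values))
    return ranks


def rsa_score(model_embeddings: np.ndarray, neural_patterns: np.ndarray) -> float:
    """Spearman correlation between the model RDM and the neural RDM (assumed alignment score)."""
    model_rdm = rank(upper_triangle(rdm(model_embeddings)))
    neural_rdm = rank(upper_triangle(rdm(neural_patterns)))
    return float(np.corrcoef(model_rdm, neural_rdm)[0, 1])


# Usage with synthetic data: 20 stimuli, model and "neural" features of different sizes.
rng = np.random.default_rng(0)
model = rng.normal(size=(20, 64))
neural = model @ rng.normal(size=(64, 30)) + 0.5 * rng.normal(size=(20, 30))
print(rsa_score(model, neural))
```

Because RSA compares pairwise dissimilarities rather than the features themselves, a model embedding and a neural pattern of different dimensionality can be compared directly.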

Crossmodal Human Enhancement of Multimodal AI

Human perception is inherently multisensory, and the senses influence one another. To develop more human-like multimodal AI models, we need systems that not only process multiple sensory inputs but also reflect the interactions between them. In this study, we investigate cross-modal interactions between vision and audition in large multimodal transformer models. We also fine-tune the visual processing of a state-of-the-art multimodal model using human visual behavioral embeddings.

Investigating the Emergence of Complexity from the Dimensional Structure of Mental Representations

Visual complexity significantly influences our perception and cognitive processing of stimuli, yet its quantification remains challenging. This project explores two computational approaches to address this issue. First, we employ the CLIP-HBA model, which integrates pre-trained CLIP embeddings with human behavioral data, to decompose objects into constituent dimensions and derive personalized complexity metrics aligned with human perception. Second, we directly prompt AI models to evaluate specific complexity attributes, such as crowding and patterning, enabling the assessment of distinct complexity qualities without relying on human-aligned embeddings. By comparing the predictive power of these models through optimization and cross-validation, we aim to discern the unique aspects of visual complexity each captures, thereby enhancing our understanding of how complexity affects perception and informing the development of more effective visual communication strategies.
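One way to turn a decomposition into constituent dimensions into a single complexity score is to ask how many dimensions an object spreads its weight across. The sketch below is a hypothetical illustration, not the project's metric: it assumes each object is described by a vector of non-negative dimension weights, measures complexity as the exponential of the entropy of those weights, and models personalization by reweighting dimensions with a hypothetical per-participant salience vector.

```python
import numpy as np


def effective_dimensionality(weights) -> float:
    """exp(Shannon entropy) of normalized non-negative dimension weights.

    Hypothetical complexity proxy: 1.0 when all weight sits on one dimension,
    len(weights) when weight is spread evenly, 0.0 for an all-zero vector.
    """
    w = np.clip(np.asarray(weights, dtype=float), 0.0, None)
    total = w.sum()
    if total == 0.0:
        return 0.0
    p = w / total
    p = p[p > 0]  # treat 0 * log(0) as 0
    return float(np.exp(-np.sum(p * np.log(p))))


def personalized_complexity(weights, salience) -> float:
    """Hypothetical personalization: scale each dimension by how much it
    matters to one participant before measuring how widely weight is spread."""
    w = np.asarray(weights, dtype=float)
    s = np.asarray(salience, dtype=float)
    return effective_dimensionality(w * s)


# A "simple" object dominated by one dimension vs. a "complex" one.
print(effective_dimensionality([0.9, 0.05, 0.05, 0.0]))
print(effective_dimensionality([0.3, 0.25, 0.25, 0.2]))
```

This score depends only on how evenly weight is distributed, not on which dimensions are active.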

Brain Inspired AI Across Levels of Neural Processing

Building on our success in refining AI with human behavior and brain activity, we combine human neuroimaging (fMRI, MEG) with electrical recordings from monkeys to develop computational models of perception. By comparing different types of brain signals, from fast neural activity to the broader patterns captured by fMRI, we aim to identify fundamental perceptual principles. This work will help create AI that more closely mimics how the brain processes information at different levels, with models of each level that can be combined into ensembles and mixtures of experts.

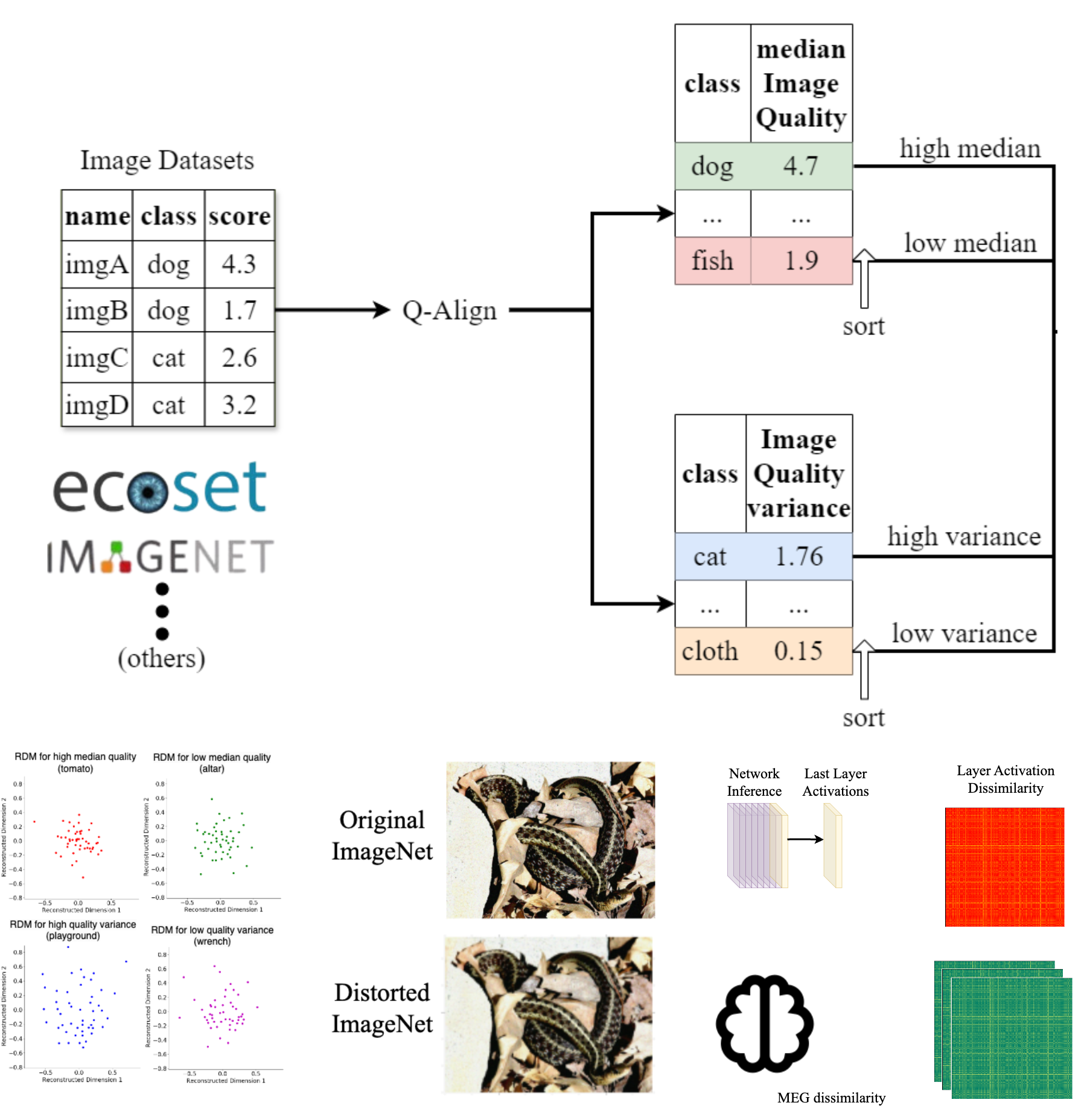

Image Quality and Neural Networks

Training data contains inherent biases, and it is often unclear what makes training data good or bad with respect to these biases. For visual datasets, one such factor is image quality, which is multifaceted, spanning blur, noise, and resolution. In this study, we investigate how different aspects of image quality, and its variance within training datasets, affect neural network performance and the networks' alignment with human neural representations. By analyzing large-scale image datasets with advanced image quality metrics, we categorize images by diverse quality factors and their variances.

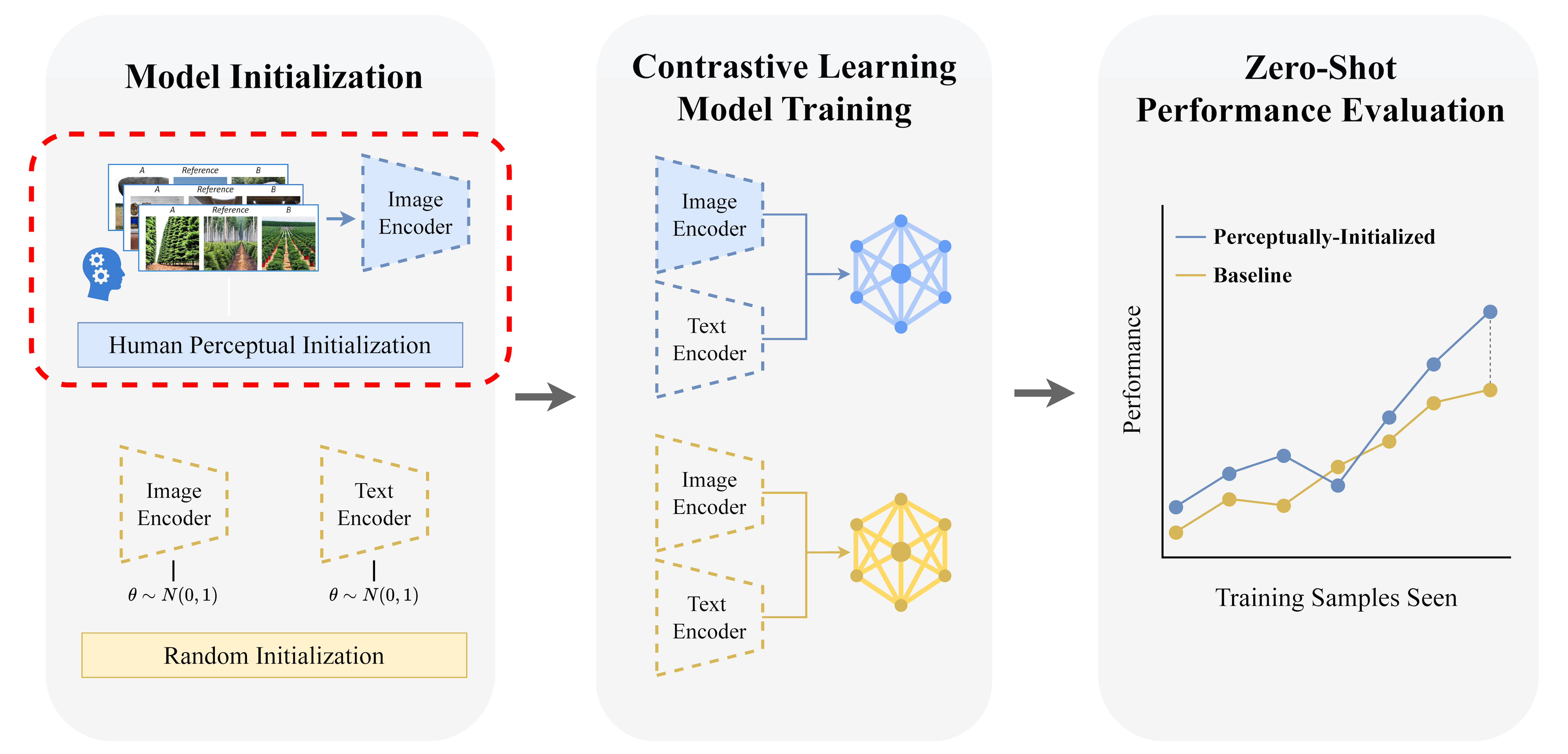
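The paragraph above does not name the quality metrics used. To illustrate scoring one factor (blur) and grouping a dataset by it, the sketch below uses the variance of a discrete Laplacian, a widely used sharpness proxy, and splits images into quantile bins. It assumes grayscale images stored as 2-D NumPy arrays and is a stand-in, not the project's pipeline.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour discrete Laplacian over the image interior.

    Blur removes fine detail, so lower values suggest a blurrier image.
    """
    g = np.asarray(gray, dtype=float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def quantile_bins(scores, n_bins: int = 3) -> np.ndarray:
    """Assign each score to a quantile bin: 0 = lowest, n_bins - 1 = highest."""
    scores = np.asarray(scores, dtype=float)
    inner_edges = np.quantile(scores, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    return np.searchsorted(inner_edges, scores, side="right")


# Usage: a noisy image and a 2x2 box-blurred copy of it.
rng = np.random.default_rng(0)
sharp = rng.random((64, 64))
blurred = (sharp[:-1, :-1] + sharp[1:, :-1] + sharp[:-1, 1:] + sharp[1:, 1:]) / 4.0
print(laplacian_variance(sharp), laplacian_variance(blurred))
```

Scores for other factors, such as noise or resolution, could be binned the same way, and the spread of each score within a dataset gives one view of its quality variance.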

Perceptual-Initialization for Vision-Language Models

This project introduces Perceptual-Initialization (PI), a paradigm shift in visual representation learning that incorporates human perceptual structure during the initialization phase rather than as a downstream fine-tuning step. By integrating human-derived triplet embeddings from the NIGHTS dataset to initialize a CLIP vision encoder, followed by self-supervised learning, our approach demonstrates significant zero-shot performance improvements across multiple classification and retrieval benchmarks without any task-specific fine-tuning. Our findings challenge conventional wisdom by showing that embedding human perceptual structure during early representation learning yields more capable vision-language aligned systems that generalize immediately to unseen tasks.
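The paragraph above does not detail how the NIGHTS triplets enter training. Assuming each NIGHTS example is a reference image, two variations of it, and a human judgment of which variation is closer to the reference, one natural objective (chosen here for illustration, not necessarily the paper's loss) is a logistic two-alternative forced-choice loss on cosine similarities. The sketch computes only the loss value with NumPy; in actual training it would be written in an autodiff framework so gradients can update the vision encoder. The `temperature` parameter is a hypothetical hyperparameter.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Row-wise cosine similarity between two (batch, dim) arrays."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return np.sum(a * b, axis=-1)


def two_afc_loss(ref, img0, img1, human_choice, temperature: float = 0.1) -> float:
    """Binary cross-entropy between the model's 2AFC choice and the human one.

    ref, img0, img1: (batch, dim) embeddings of the reference and two variations.
    human_choice: (batch,) array, 1 if people judged img1 closer to ref, else 0.
    temperature: hypothetical scale that sharpens the model's choice probability.
    """
    s0 = cosine_similarity(ref, img0)
    s1 = cosine_similarity(ref, img1)
    # Probability that the model picks img1 as the more similar variation.
    p1 = 1.0 / (1.0 + np.exp(-(s1 - s0) / temperature))
    y = np.asarray(human_choice, dtype=float)
    eps = 1e-12  # guards against log(0)
    return float(-np.mean(y * np.log(p1 + eps) + (1.0 - y) * np.log(1.0 - p1 + eps)))


# Usage: the model places img1 near the reference, agreeing with one label but not the other.
ref = np.array([[1.0, 0.0]])
img0 = np.array([[0.0, 1.0]])
img1 = np.array([[1.0, 0.1]])
print(two_afc_loss(ref, img0, img1, [1]), two_afc_loss(ref, img0, img1, [0]))
```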

Semantic Binding Windows

This project investigates the concept of a semantic binding window—the range within latent semantic spaces in which visual and auditory stimuli are perceptually integrated. Drawing parallels to the temporal binding window observed in multisensory integration, we posit that semantic representations also have a spatial or latent semantic threshold for integration. Through behavioral experiments and computational modeling, we will construct multimodal embeddings to examine how visual and auditory stimuli traverse latent semantic spaces. Neural validation using fMRI and MEG data will further elucidate the alignment and interaction of these embeddings.
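A temporal binding window is often estimated from the stimulus asynchrony at which integration reports fall to a criterion level; by analogy, a semantic binding window could be summarized as the semantic distance at which that happens. The sketch below is hypothetical: it assumes visual and auditory stimuli are embedded in a shared space, uses cosine distance as the semantic distance, and linearly interpolates where the proportion of "integrated" responses first falls below 0.5. The response proportions in the usage line are made up for illustration.

```python
import numpy as np


def cosine_distance(a, b) -> float:
    """1 - cosine similarity between two embedding vectors (assumed distance measure)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def binding_window(distances, p_integrated, criterion: float = 0.5):
    """Hypothetical window estimate: the distance where the proportion of
    'integrated' reports first drops below the criterion, by linear
    interpolation; None if it never does. Distances must be ascending.
    """
    d = np.asarray(distances, dtype=float)
    p = np.asarray(p_integrated, dtype=float)
    for k in range(1, len(d)):
        if p[k - 1] >= criterion > p[k]:
            fraction = (p[k - 1] - criterion) / (p[k - 1] - p[k])
            return float(d[k - 1] + fraction * (d[k] - d[k - 1]))
    return None


# Usage with illustrative (not measured) response proportions.
print(binding_window([0.0, 0.2, 0.4, 0.6], [0.9, 0.7, 0.3, 0.1]))
```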

Audiovisual Object Embeddings over Development

This project investigates how visual and auditory semantic embeddings evolve over the course of development, driven by sensory experience and learning. The research focuses specifically on how distinct visual and auditory representations independently develop and mature into adult-like semantic spaces. Using behavioral experiments, computational modeling, and neural validation through fMRI and MEG, we aim to characterize developmental trajectories and pinpoint how sensory experience shapes these semantic embeddings. Experiments will use real-world stimuli to explore the plasticity of semantic representations. Insights from this project will deepen our understanding of cognitive development.

Recent Publications

- Hu, Y., Wang, R., Zhao, S. C., Zhan, X., Kim, D. H., Wallace, M., & Tovar, D. A. (2025). Beginning with You: Perceptual-Initialization Improves Vision-Language Representation and Alignment. arXiv. https://arxiv.org/abs/2505.14204

- Zhao, S. C., Hu, Y., Lee, J., Bender, A., Mazumdar, T., Wallace, M., & Tovar, D. A. (2025). Shifting attention to you: Personalized brain-inspired AI models. arXiv. https://arxiv.org/abs/2502.04658

- Chatterjee, C., Petulante, A., Jani, K., Spencer-Smith, J., Hu, Y., Lau, R., Fu, H., Hoang, T., Zhao, S. C., & Deshmukh, S. (2024). Pre-trained audio transformer as a foundational AI tool for gravitational waves. arXiv. https://arxiv.org/abs/2412.20789

- Zhao, S. C., Lee, J., Bender, A., Mazumdar, T., Leong, A., Nkrumah, P. O., Wallace, M., & Tovar, D. A. (2024). Brain-inspired embedding model: Scaling and perceptual fine-tuning. Proceedings of the Cognitive Computational Neuroscience Conference.

- Lee, J., Nkrumah, P. O., Zhao, S. C., Quackenbush, W. J., Leong, A., Mazumdar, T., Wallace, M., & Tovar, D. A. (2024). The role of image quality in shaping neural network representations and performance. Proceedings of the Cognitive Computational Neuroscience Conference.