Research Projects

Crossmodal Human Enhancement of Multimodal AI

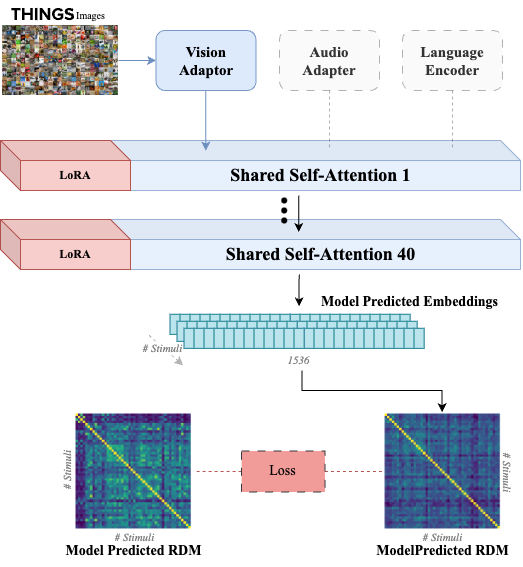

Human perception is inherently multisensory, with sensory modalities influencing one another. To develop more human-like multimodal AI models, it is essential to design systems that not only process multiple sensory inputs but also reflect their interconnections. In this study, we investigate the cross-modal interactions between vision and audition in large multimodal transformer models. Additionally, we fine-tune the visual processing of a state-of-the-art multimodal model using human visual behavioral embeddings.

Team Members:

Image Quality and Neural Networks

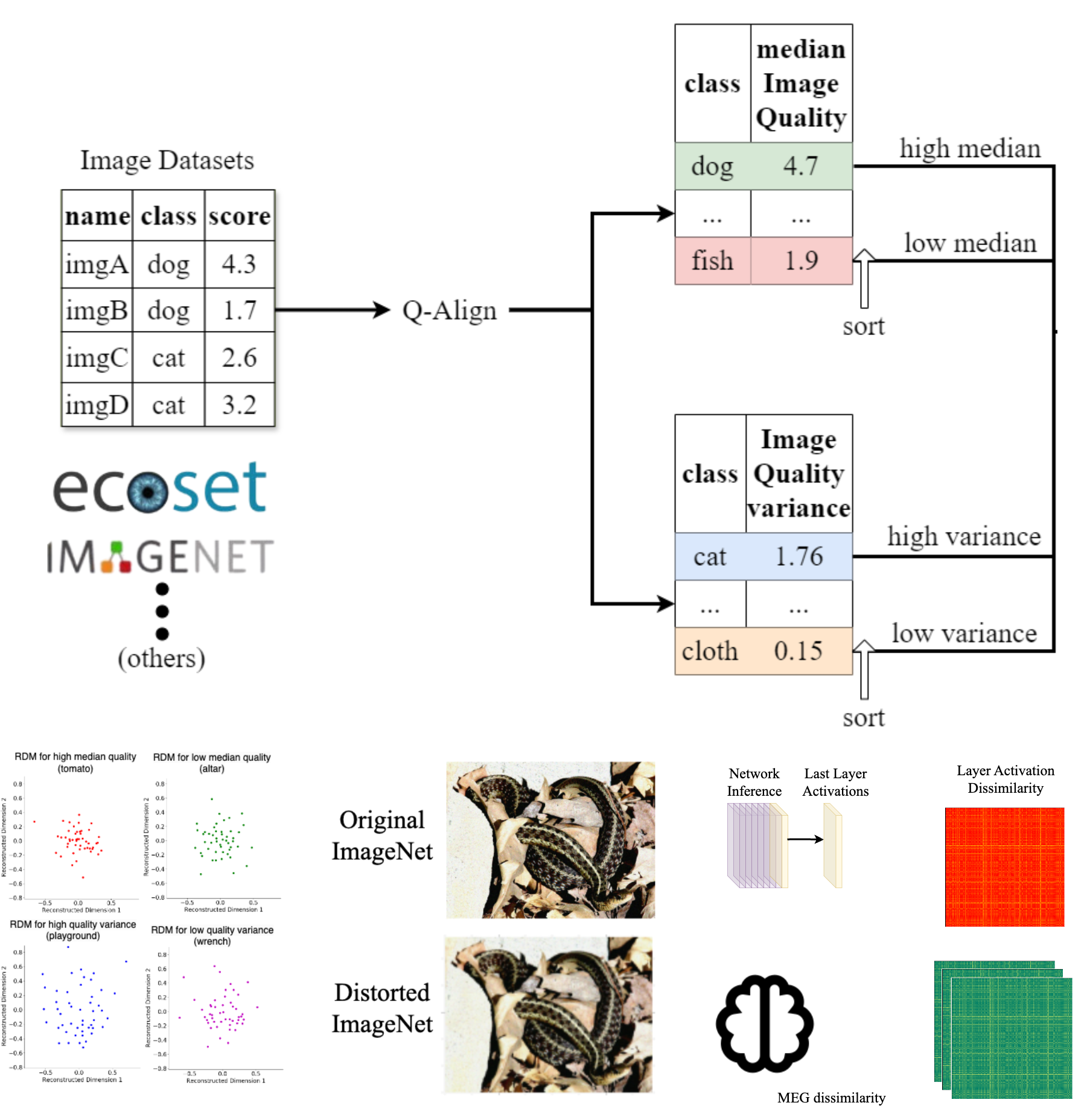

Training data carries inherent biases, and it is often not immediately clear what constitutes good or bad training data with respect to these biases. One such bias in visual datasets is image quality, which is multifaceted and involves aspects such as blur, noise, and resolution. In this study, we investigate how different aspects of image quality, and their variance within training datasets, affect the performance of neural networks and the alignment of those networks with human neural representations. By analyzing large-scale image datasets using advanced image quality metrics, we categorize images based on diverse quality factors and their variances.
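As an illustration of what a single image quality metric can look like, the sketch below scores sharpness with the variance of the Laplacian, a common blur heuristic. This metric is an assumption chosen for illustration; the project description does not name the metrics it uses. The function names `laplacian_variance` and `box_blur` are hypothetical, and images are plain nested lists of grayscale values.

```python
from statistics import pvariance


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Higher values mean more high-frequency detail, i.e. a sharper image.
    Assumed metric for illustration; not taken from the project itself.
    """
    h, w = len(img), len(img[0])
    responses = [
        img[r - 1][c] + img[r + 1][c] + img[r][c - 1] + img[r][c + 1]
        - 4 * img[r][c]
        for r in range(1, h - 1)
        for c in range(1, w - 1)
    ]
    return pvariance(responses)


def box_blur(img):
    """3x3 mean filter on interior pixels; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [img[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = sum(window) / 9
    return out


# Toy data: an 8x8 checkerboard (maximally sharp) and a blurred copy.
sharp = [[255 if (r + c) % 2 else 0 for c in range(8)] for r in range(8)]
blurred = box_blur(sharp)

sharp_score = laplacian_variance(sharp)
blurred_score = laplacian_variance(blurred)
```

Scores like these could be computed per image and then binned to categorize a dataset by sharpness, with the spread of scores within a dataset serving as one measure of quality variance.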

Team Members:

Investigating the Emergence of Complexity from the Dimensional Structure of Mental Representations

Visual complexity significantly influences our perception and cognitive processing of stimuli, yet its quantification remains challenging. This project explores two computational approaches to address this issue. First, we employ the CLIP-HBA model, which integrates pre-trained CLIP embeddings with human behavioral data, to decompose objects into constituent dimensions and derive personalized complexity metrics aligned with human perception. Second, we directly prompt AI models to evaluate specific complexity attributes, such as crowding and patterning, enabling the assessment of distinct complexity qualities without relying on human-aligned embeddings. By comparing the predictive power of these models through optimization and cross-validation, we aim to discern the unique aspects of visual complexity each captures, thereby enhancing our understanding of how complexity affects perception and informing the development of more effective visual communication strategies.

Team Members:

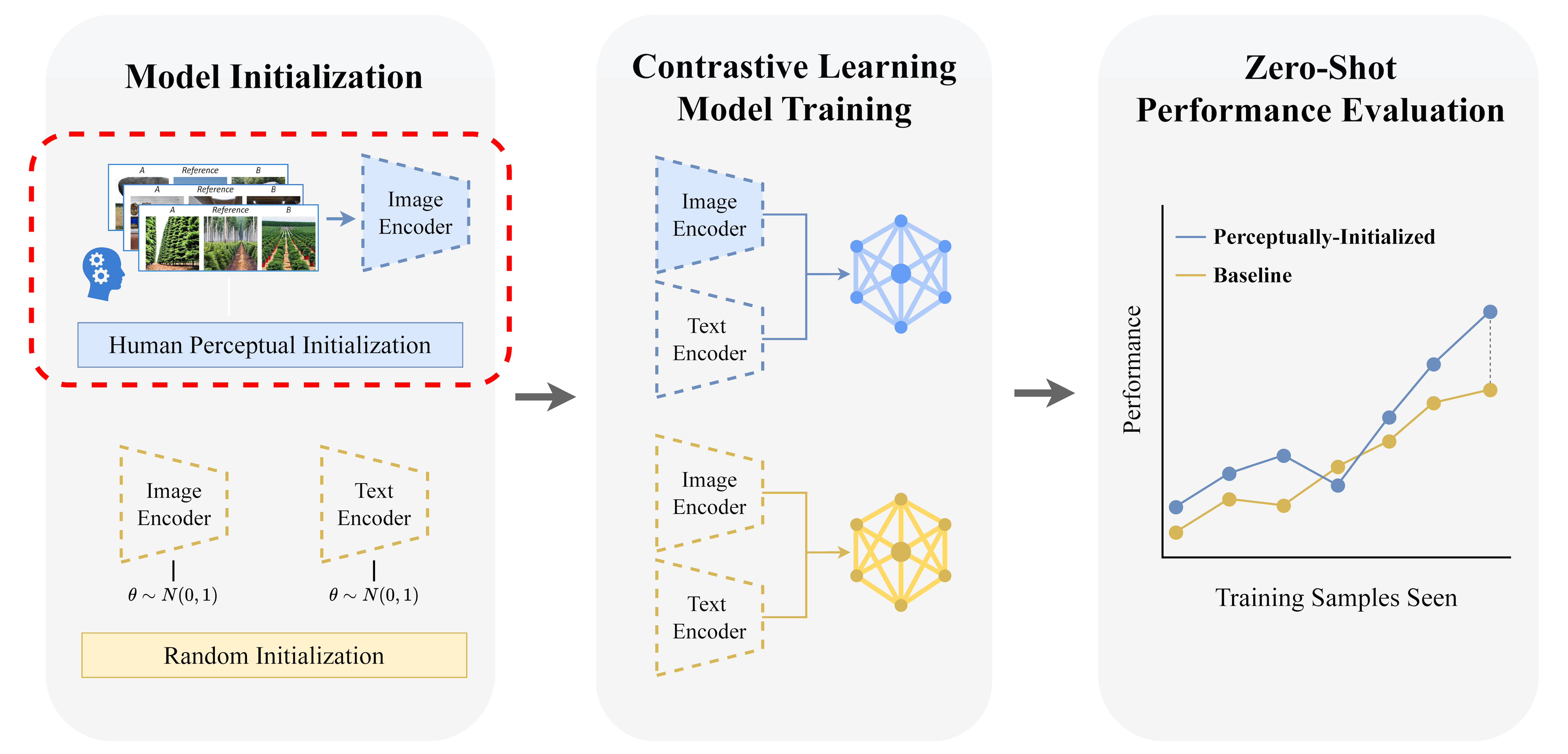

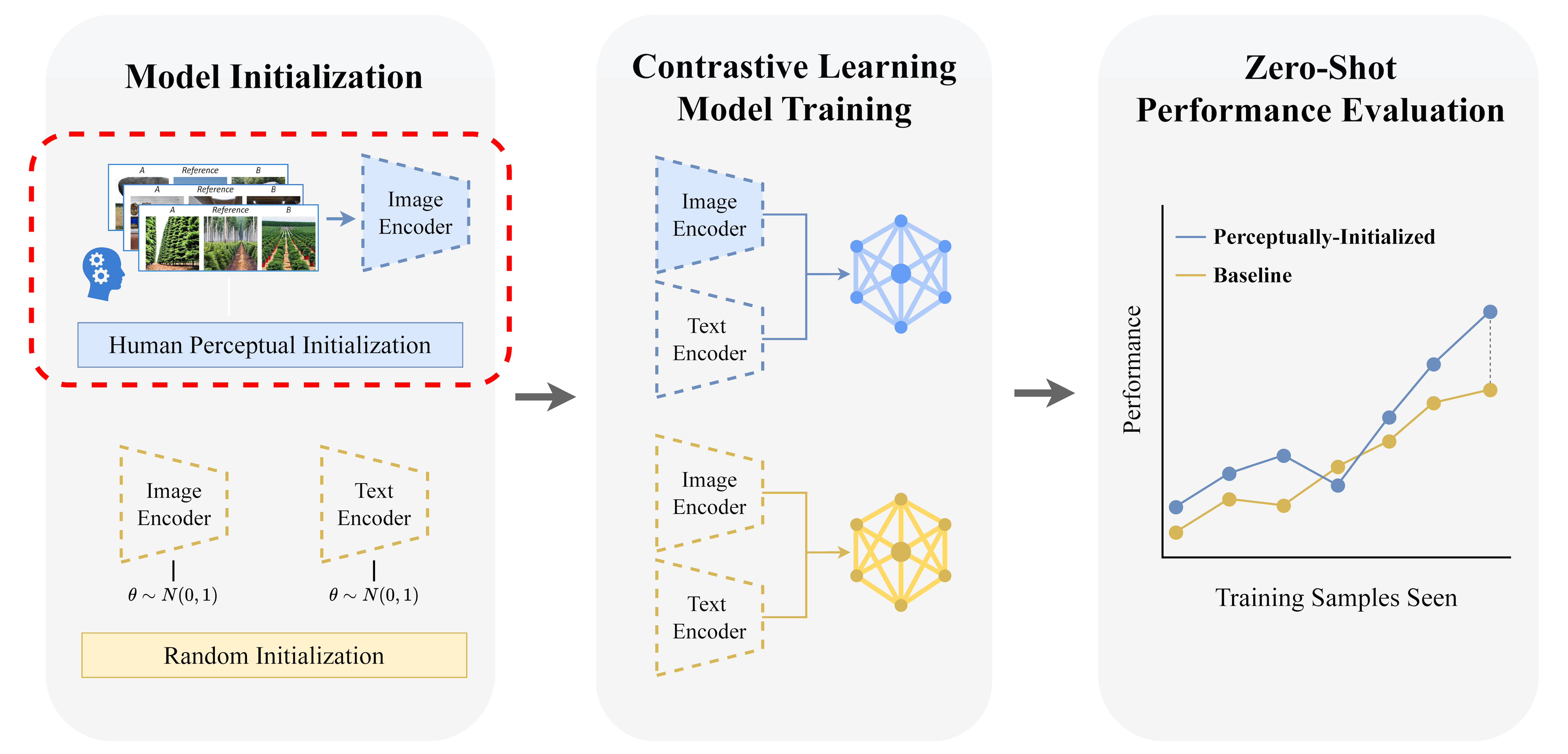

Perceptual-Initialization for Vision-Language Models

This project introduces Perceptual-Initialization (PI), a paradigm shift in visual representation learning that incorporates human perceptual structure during the initialization phase rather than as a downstream fine-tuning step. By integrating human-derived triplet embeddings from the NIGHTS dataset to initialize a CLIP vision encoder, followed by self-supervised learning, our approach demonstrates significant zero-shot performance improvements across multiple classification and retrieval benchmarks without any task-specific fine-tuning. Our findings challenge conventional wisdom by showing that embedding human perceptual structure during early representation learning yields more capable vision-language aligned systems that generalize immediately to unseen tasks.
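To make the idea of learning from human similarity judgments concrete, here is a minimal sketch of a triplet objective. It assumes each judgment is a (reference, chosen, rejected) triplet in which a human picked the image more similar to the reference. The function names, the cosine distance, and the margin value are illustrative assumptions, not the project's actual training code.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def triplet_hinge_loss(ref, chosen, rejected, margin=0.05):
    """Zero once the human-chosen image is closer to the reference by `margin`.

    `margin` is a hypothetical hyperparameter chosen for illustration.
    """
    gap = cosine_distance(ref, chosen) - cosine_distance(ref, rejected)
    return max(0.0, margin + gap)


def agreement(triplets):
    """Fraction of triplets where the embedding ranks the chosen image closer."""
    hits = sum(
        cosine_distance(ref, chosen) < cosine_distance(ref, rejected)
        for ref, chosen, rejected in triplets
    )
    return hits / len(triplets)


# Toy 2-D embeddings standing in for vision-encoder outputs.
ref, near, far = [1.0, 0.0], [0.9, 0.1], [0.0, 1.0]
loss_consistent = triplet_hinge_loss(ref, near, far)    # embedding agrees with the human
loss_inconsistent = triplet_hinge_loss(ref, far, near)  # embedding disagrees
```

In a perceptual-initialization setting, minimizing a loss of this kind would happen before large-scale pretraining, so that later training starts from weights that already respect human similarity structure.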

Team Members:

Personalized Brain-Inspired AI Models (CLIP-Human Based Analysis)

We introduced personalized brain-inspired AI models by integrating human behavioral embeddings and neural data to better align artificial intelligence with cognitive processes. The study fine-tunes a state-of-the-art multimodal AI model (CLIP) in a stepwise manner: first using large-scale behavioral decisions, then group-level neural data, and finally participant-specific neural dynamics. This stepwise procedure enhances both behavioral and neural alignment. The results demonstrate the potential for individualized AI systems capable of capturing unique neural representations, with applications spanning medicine, cognitive research, and human-computer interfaces.

Team Members:

Brain Inspired Hybrid Architectural Designs

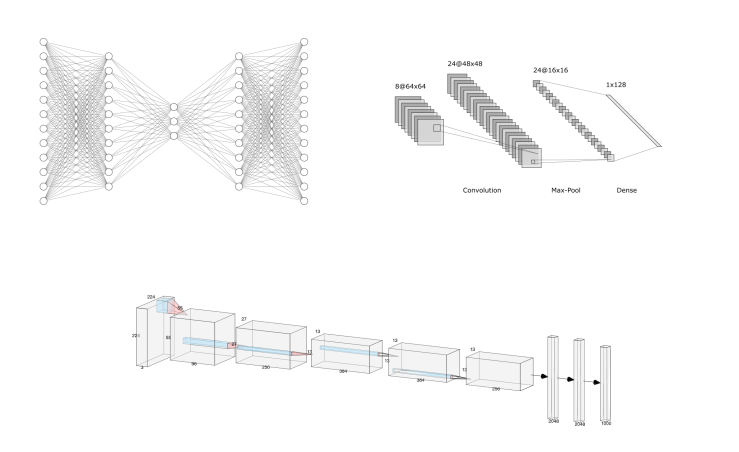

We leverage activation spaces from diverse neural network architectures to identify optimal combinations of network processing that functionally emulate brain activity. By systematically exploring and aligning these internal representations with neural responses to sensory stimuli, this approach aims to discover novel hybrid architectures capable of modeling the brain's complex computational principles.

Team Members:

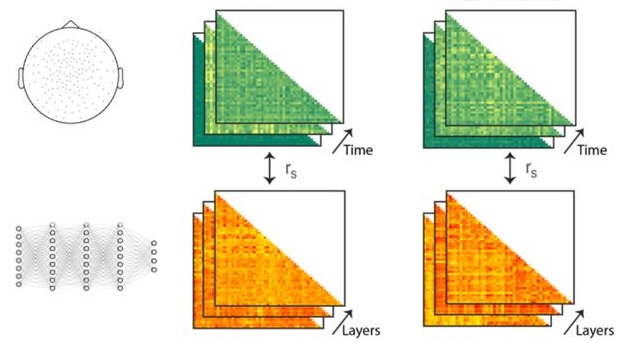

Representational Similarity Analysis (RSA) GUI

This project develops an intuitive graphical user interface (GUI) to facilitate the alignment and comparison of representational structures across diverse AI models and biological systems. Utilizing Representational Similarity Analysis (RSA), the tool constructs and analyzes Representational Dissimilarity Matrices (RDMs) to identify correspondences between different systems. It incorporates multiple alignment metrics, including Spearman correlation, Centered Kernel Alignment (CKA), Procrustes analysis, and optimization techniques such as ridge regression and Bayesian methods. By enabling researchers to easily compare and visualize representations, the GUI helps uncover shared and unique patterns of information encoding across models and biological data. This approach significantly simplifies complex representational comparisons, bridging machine learning and cognitive neuroscience. Ultimately, the RSA GUI serves as a versatile platform for exploring how information structures align across artificial and biological representations.
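The core RSA comparison can be sketched in a few lines. The example below builds a correlation-distance RDM for each system and compares the two RDMs with Spearman correlation, one of the metrics listed above. It is a minimal standard-library sketch, not the GUI's implementation; the function names and the toy data are hypothetical.

```python
from itertools import combinations
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def rdm_upper(patterns):
    """Upper triangle of a correlation-distance RDM (1 - Pearson r).

    `patterns` holds one response vector per stimulus.
    """
    return [
        1.0 - pearson(patterns[i], patterns[j])
        for i, j in combinations(range(len(patterns)), 2)
    ]


def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def rsa_score(patterns_a, patterns_b):
    """Spearman correlation between the two systems' RDM upper triangles."""
    return pearson(ranks(rdm_upper(patterns_a)), ranks(rdm_upper(patterns_b)))


# Toy data: 4 stimuli; the two systems may have different dimensionality.
model_acts = [[1.0, 2.0, 4.0], [2.0, 1.0, 0.5], [0.0, 3.0, 1.0], [5.0, 1.0, 2.0]]
brain_resp = [
    [0.2, 1.1, 0.4, 0.9, 0.3],
    [1.0, 0.1, 0.8, 0.2, 0.7],
    [0.3, 0.9, 0.6, 1.2, 0.1],
    [0.9, 0.3, 0.2, 0.4, 1.0],
]
score = rsa_score(model_acts, brain_resp)
```

Because the comparison happens at the level of RDMs, the two systems only need to share the same stimuli, not the same number of units or voxels. This is what lets RSA relate model layers to brain recordings directly.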

Team Members:

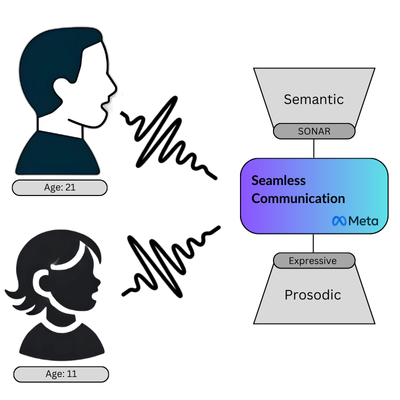

Speech-to-Sentiment Pipeline for Analyzing Semantic and Expressive Changes Across the Lifespan

This project aims to develop an accessible pipeline and user interface that converts spoken language into sentiment and semantic analyses. The initial application will investigate how semantics and expressivity in speech evolve with age. By processing speech inputs to extract prosodic features—such as intonation, tone, and pitch—and semantic content, we will generate representational embeddings that encapsulate both the meaning and emotional nuances of spoken language. Utilizing multimodal models, the pipeline will facilitate the comparison of these embeddings across different age groups, providing insights into the developmental trajectory of speech characteristics throughout the human lifespan.

Team Members:

Audiovisual Object Embeddings over Development

This project investigates how visual and auditory semantic embeddings evolve over the course of development, driven by sensory experiences and learning. The research focuses specifically on how distinct visual and auditory representations independently develop and mature into adult-like semantic spaces. Using behavioral experiments, computational modeling, and neural validation through fMRI and MEG, we aim to characterize developmental trajectories and pinpoint how sensory experience shapes these semantic embeddings. Experiments will utilize real-world stimuli to explore the plasticity of semantic representations. Insights from this project will deepen our understanding of cognitive development.

Team Members:

Brain Inspired AI Across Levels of Neural Processing

Building on our success in refining AI using human behavior and brain activity, we combined human brain scans (fMRI, MEG) with electrical recordings from monkeys to develop computational models of perception. By comparing different types of brain signals, from fast neural activity to the broader patterns seen in fMRI, we aim to identify fundamental perceptual principles. This work will help create AI models that more closely mimic how the brain processes information at different levels, and that can be combined into ensembles and mixtures of experts.

Team Members:

Influence of Immersion, Congruence, and Modality on Sensory Encoding and Memory

This research investigates how immersive environments, the congruence between sensory modalities, and the mode of information presentation affect sensory encoding and memory retention. Participants across various age groups will engage in storytelling tasks within virtual reality settings that vary in environmental congruence and modality (visual, auditory, or multimodal). We will analyze the recorded retellings for speech dimensions such as intonation, tone, pitch, and semantic structure to quantitatively assess narrative patterns. The findings aim to elucidate how immersion, congruence, and modality influence sensory encoding and memory, informing the design of educational tools and therapeutic interventions.

Team Members:

LLM: Story, Image, and Audio Generation for Developmental Cognition and Linguistics

This project investigates how development and immersion impact memory retention and cognitive processes. Participants of different ages will recall stories presented in various immersive environments to assess the influence of these factors on learning. Additionally, we will prompt large language models (LLMs) with personas representing different age groups to generate retellings of the same stories. By comparing these AI-generated narratives to human retellings, we aim to evaluate the alignment of LLM outputs with human cognitive patterns across developmental stages. This approach will provide insights into the capabilities of LLMs in modeling age-specific linguistic and cognitive behaviors.

Team Members:

Multimodal Learning Plasticity over Development

This project investigates the development of multimodal learning plasticity across different developmental stages. Using novel stimuli with arbitrary audiovisual pairings, we will conduct both passive and active learning sessions, including sessions in immersive environments, to assess how effectively these associations are retained over time. Through behavioral experiments and electroencephalography (EEG), we aim to characterize the developmental trajectory of multimodal learning and identify critical periods for such plasticity. The findings will provide insights into how sensory experiences shape cognitive development and inform applications in education and neurodevelopmental research.

Team Members:

Personalized Representational Connectivity with fMRI-Guided CLIP

Human visual perception emerges from coordinated activity across many interconnected brain regions. To develop AI models that better reflect this biological organization, we fine-tuned CLIP to predict both the representational structure within individual visual cortical areas and the functional connectivity patterns linking them. Using lightweight model adaptations—such as layer-specific feature reweighting and low-rank personalization—the model successfully captured individual differences in neural processing across 14 visual regions. Additionally, the fMRI-aligned models achieved zero-shot generalization to MEG dynamics, demonstrating a pathway toward personalized and biologically grounded multimodal AI systems.
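The idea behind low-rank personalization can be sketched as follows: a shared weight matrix is kept for all participants, and each participant receives a small low-rank correction to it. This is a generic sketch in the style of low-rank adaptation, assumed here for illustration; the names `personalized_weight`, `up`, and `down` are hypothetical and do not describe the project's actual adapter design.

```python
def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def personalized_weight(shared, up, down):
    """Return shared + up @ down.

    shared: d_out x d_in weights common to all participants.
    up:     d_out x r and down: r x d_in, the per-participant factors.
    """
    delta = matmul(up, down)
    return [
        [s + d for s, d in zip(s_row, d_row)]
        for s_row, d_row in zip(shared, delta)
    ]


def apply_linear(weight, x):
    """Matrix-vector product: one linear layer without bias."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


# Toy sizes: d_out = 3, d_in = 4, rank r = 1.
shared = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
up = [[1.0], [0.0], [0.0]]        # hypothetical participant-specific factor
down = [[0.5, 0.0, 0.0, 0.0]]     # hypothetical participant-specific factor

w_participant = personalized_weight(shared, up, down)
features = apply_linear(w_participant, [2.0, 1.0, 1.0, 1.0])

# Parameters per participant: r * (d_in + d_out) instead of d_in * d_out.
extra_params = 1 * (4 + 3)
full_params = 4 * 3
```

The appeal of this design is that each participant adds only r * (d_in + d_out) parameters per adapted layer, which keeps personalization lightweight even when the shared model is large.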

Team Members:

Probing Critical Periods in Model Learning

This project investigates the learning dynamics of models by applying disruptive perturbations at various training stages, with the goal of identifying critical periods that significantly influence model performance and representation formation. By applying targeted disruptions during training, we aim to understand how these interventions affect learning trajectories, generalization capabilities, and the development of internal representations. This research seeks to uncover parallels between artificial learning processes and biological critical periods, providing insights into optimizing training protocols for enhanced model robustness and adaptability.

Team Members:

Semantic Binding Windows

This project investigates the concept of a semantic binding window—the range within latent semantic spaces in which visual and auditory stimuli are perceptually integrated. Drawing parallels to the temporal binding window observed in multisensory integration, we posit that semantic representations also have a spatial or latent semantic threshold for integration. Through behavioral experiments and computational modeling, we will construct multimodal embeddings to examine how visual and auditory stimuli traverse latent semantic spaces. Neural validation using fMRI and MEG data will further elucidate the alignment and interaction of these embeddings.

Team Members:

The Brain Initialization Project

This project builds on the findings of the Perceptual-Initialization project, but uses neural signals from fMRI regions of interest (ROIs), rather than random seeds, to guide the initialization of AI models. One goal of this project is to determine whether different ROIs differentially improve model performance.