About

I am a first-year PhD student in Biomedical Engineering with a background in neuroscience. I am passionate about applying scientific insights to real-world challenges and am particularly interested in industry opportunities where I can bridge research and innovation.

Research Interests

Current Research Projects

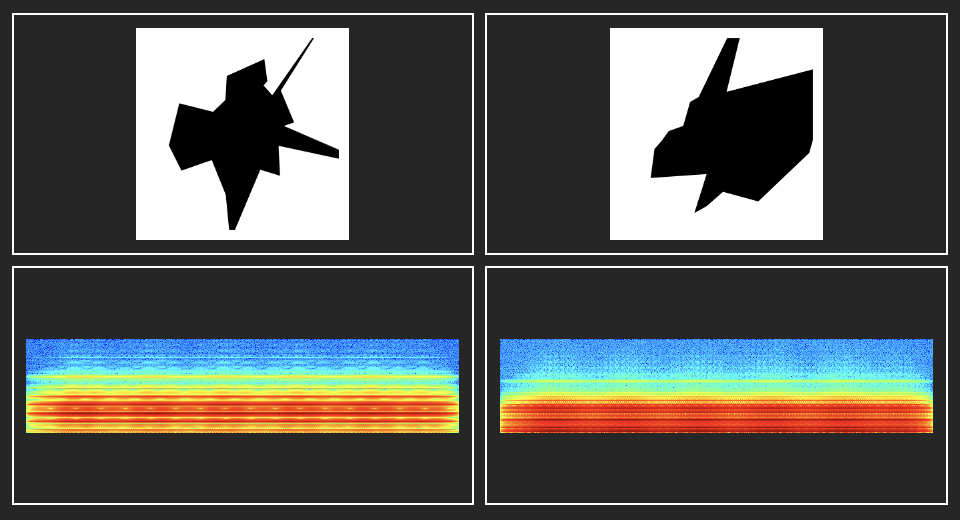

Semantic Binding Windows

This project investigates the concept of a semantic binding window: the range within latent semantic space over which visual and auditory stimuli are perceptually integrated. Drawing a parallel to the temporal binding window observed in multisensory integration, we posit that semantic representations also have a distance threshold in latent semantic space beyond which stimuli are no longer integrated. Through behavioral experiments and computational modeling, we will construct multimodal embeddings to examine how visual and auditory stimuli traverse latent semantic spaces. Neural validation using fMRI and MEG data will further elucidate the alignment and interaction of these embeddings.

Audiovisual Object Embeddings over Development

This project investigates how visual and auditory semantic embeddings evolve over the course of development, driven by sensory experience and learning. The research focuses specifically on how distinct visual and auditory representations independently develop and mature into adult-like semantic spaces. Using behavioral experiments, computational modeling, and neural validation through fMRI and MEG, we aim to characterize developmental trajectories and pinpoint how sensory experience shapes these semantic embeddings. Experiments will use real-world stimuli to explore the plasticity of semantic representations. Insights from this project will deepen our understanding of cognitive development.

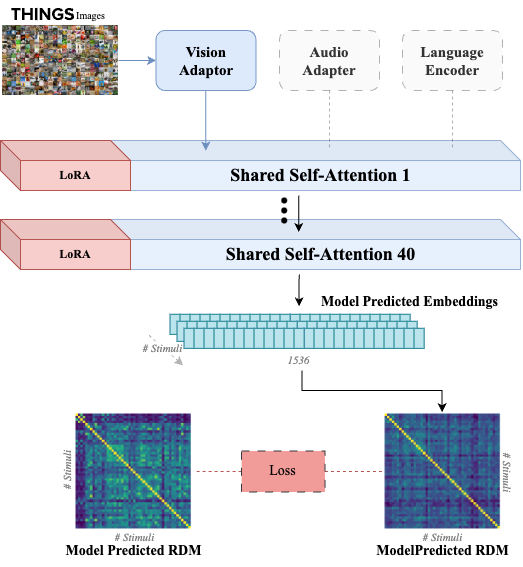

Crossmodal Human Enhancement of Multimodal AI

Human perception is inherently multisensory, with sensory modalities influencing one another. To develop more human-like multimodal AI models, it is essential to design systems that not only process multiple sensory inputs but also reflect their interconnections. In this study, we investigate the crossmodal interactions between vision and audition in large multimodal transformer models. Additionally, we fine-tune the visual processing of a state-of-the-art multimodal model using human visual behavioral embeddings.

Brain-Inspired AI Across Levels of Neural Processing

Leveraging our success in refining AI using human behavior and brain activity, we combine human brain scans (fMRI, MEG) with electrical recordings from monkeys to develop computational models of perception. By comparing different types of brain signals, from fast neural activity to the broader patterns seen in fMRI, we aim to identify fundamental perceptual principles. This work will help create AI models that more closely mimic how the brain processes information at different levels, and that can be combined into ensembles and mixtures of experts.

Multimodal Learning Plasticity over Development

This project investigates how multimodal learning plasticity changes across developmental stages. Using novel stimuli with arbitrary audiovisual pairings, we will conduct both passive and active learning sessions, including sessions within immersive environments, to assess how effectively these associations are retained over time. Through behavioral experiments and electroencephalography (EEG), we aim to characterize the developmental trajectory of multimodal learning and identify critical periods for such plasticity. The findings will provide insights into how sensory experiences shape cognitive development and inform applications in education and neurodevelopmental research.